A New System for Cooling Down Computers Could Revolutionize the Pace of Innovation | Science | Smithsonian Magazine

In 1965, Gordon Moore, a co-founder of Intel, predicted that computing would increase tremendously in power and fall in price. For years, what later became known as Moore’s Law proved true, as microchip processing power roughly doubled and costs dropped every couple of years. But as power increased exponentially, so did the heat produced by packing billions of transistors atop a chip the size of a fingernail.

As electricity meets resistance passing through those processors, it produces heat. More processors mean higher temperatures, threatening the continued growth of computing power because as they get hotter, chips decrease in efficiency and eventually fail. There’s also an environmental cost. Those chips, and the cooling they require, devour power with an insatiable hunger. Data centers use approximately one percent of the world’s electricity. In the United States alone, they consume electricity and water for cooling roughly equivalent to that used by the entire city of Philadelphia in a year.

Now, Swiss researchers have published a study in the journal Nature that says they have one solution to the cooling problem. “Data centers consume a huge quantity of electricity and water, and as we rely more and more on this data, this usage is just going to increase,” says Elison Matioli, a professor in the Institute of Electrical Engineering at Ecole Polytechnique Fédérale de Lausanne (EPFL) who led the study. “So finding ways to handle the dissipated heat or dissipated power is an incredibly important issue.”

Previous efforts to cool microchips have relied on metal heat sinks, often combined with fans, that absorb heat and act like an exhaust system. Some data centers rely on fluid flowing through servers to draw away heat. But those systems are designed and fabricated separately and then combined with the chips. Matioli and his team have designed and fabricated chips and their fluid cooling systems together. In the new design, the cooling elements are integrated throughout by creating microchannels for fluid within semiconductors that spirit away the heat, save energy, and mitigate the environmental problems created by data centers.

Their work could also have important applications in an electrified future, helping eliminate the heat problem and reducing the size of power converters on cars, solar panels and other electronics. “The proposed technology should allow further miniaturization of electronic devices, possibly extending Moore’s Law and significantly lowering the energy consumption in cooling of electronic devices,” they write.

Heat produced by chips in electronics has been a concern as far back as the 1980s, according to Yogendra Joshi, an engineering professor at Georgia Tech, who was not part of the study. Early microprocessors like Intel’s first central processing unit, released in 1971, didn’t produce enough heat to need cooling. By the 1990s, fans and heat sinks were integrated into virtually all central processing units (the physical heart of the computer that includes the memory and computation components) as increased power created increased heat. But relying on metal heat sinks that draw the heat away and dissipate it through the air raises the temperature of the entire device and creates a loop that just produces more heat. “Electronics normally do not work really well when they are hot,” Matioli adds. “So in a way, you reduce the efficiency of the entire electronics, which ends up heating up the chip more.”

Researchers explored microfluidics, the science of controlling fluids in tiny channels, as far back as the early 1990s. Efforts increased after the U.S. Department of Defense’s Defense Advanced Research Projects Agency (DARPA) first became interested in the technology in the late 1990s. The agency began to take deeper interest in 2008 as the number of heat-producing transistors on a microprocessor chip went from thousands to billions. Joshi estimates that the agency has spent $100 million on research, including funding what it called ICECool programs at IBM and Georgia Tech beginning in 2012.

Over the years, embedding liquid cooling in chips has been explored through three basic designs. The first two designs did not bring cooling fluid into direct contact with the chip. One used a cold plate lid with microfluidic channels to cool chips. Another featured a layer of material on the back of chips to transfer heat to a fluid-cooled plate without the lid. The third design, the one that Matioli and his team explored, brings the coolant into direct contact with the chip.

Matioli’s research builds on work by Joshi and others. In 2015, Joshi and his team reported cutting fluid channels directly into integrated circuits, yielding temperatures 60 percent lower than air cooling. “Cooling technology is absolutely going to be vital and using fluids other than air is a crucial part of being able to remove these very large heat rejection requirements put out by the computer systems,” Joshi says. “And you want to have the coolant where the heat is being produced. The farther away it is, the less effective at a very high level it’s going to be.”

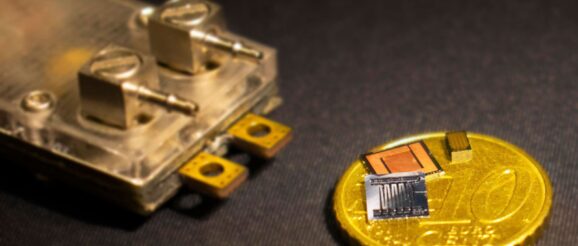

That’s what Matioli’s research advanced. To test their concept, the team designed water-cooled chips that convert alternating current (AC) into direct current (DC), integrating microchannels filled with water in the same semiconductor substrate. The substrate they used was gallium nitride, which enabled much greater miniaturization than the typically used silicon. The result, according to the paper, is cooling power up to 50 times greater than conventional designs.

The trick was finding a new way to fabricate chips so the fluid channels, ranging from 20 microns (the width of a human skin cell) to 100 microns, were as close as possible to the electronics. They combined those with large channels on the back of the chip to reduce the pressure needed to make the liquid flow. “The analogy is it’s like our bodies,” Matioli says. “We have the larger arteries and the smaller capillaries and that’s how the whole body minimizes the pressure necessary to distribute blood.”

The cooling technology has the potential to become a key part of power converters ranging from small devices to electric cars. The converter Matioli’s team created put out more than three times the power of a typical laptop charger but was the size of a USB stick. He compares it to the evolution of a computer that once filled a room and now fits in a pocket. “We could start imagining the same thing for power electronics in applications that go all the way from power supplies to electric vehicles to solar inverters for solar panels and anything related to energy,” Matioli says. “So that opens a lot of possibilities.”

His team is getting interest from manufacturers, but he declined to elaborate. To Joshi, the research is a first step. “There remains more work to be done in scaling up of the approach, and its implementation in actual products.”

In a commentary accompanying the Nature paper, Tiwei Wei, a research scholar at Stanford University who was not part of the study, also said challenges remained in implementing the design, including studying the longevity of the gallium nitride layer and possible manufacturing issues. But their work, he says, “is a big step towards low-cost, ultra-compact and energy-efficient cooling systems for power electronics.”