Innovation and Experimentation: 3 Projects from The New York Times’s Maker Day

A few times every year, a large group of New York Times employees participate in our Maker events, where they take a break from their normal jobs to work on self-directed projects and participate in workshops. These are Technology-sponsored events that provide the opportunity for many departments (including Data, Product and Design) to dedicate time to innovation, collaboration and learning.

Maker events are meeting-free by design, giving participants time to explore new ideas and collaborate with people they don’t normally work with. Some participants use the time to tackle an item on their to-do list; others build prototypes that explore design solutions to a specific problem. Sometimes participants step away from their computers entirely to create physical objects, like the Arduino project below or last year’s newspaper upcycling project.

What our Times colleagues work on is up to them.

We asked a few of our colleagues to share what they worked on at our most recent Maker event. Here’s what they said.

Updating the API Documentation Service

By JP Robinson

For several years, Times developers published and shared specifications for our internal APIs on a hacked-together implementation of a LucyBot documentation site. The site had a Rube Goldberg-like architecture for updating those specs. It included a service running on App Engine Flexible that used Cloud Endpoints and API keys for authentication. When an API was updated, that service would check out the current specs from a GitHub repo, alter them and push them back to the repo. The push triggered a GitHub webhook that called a Google Cloud Function in a separate Google Cloud project, which would then attempt to restart the service hosting the docs. The hope was that, on restart, an embedded git submodule would pick up the updated spec. The docs service itself relied on Google’s Identity-Aware Proxy to authenticate users. Not surprisingly, this rarely worked as expected and was not a sustainable solution.

I spent Maker Day adding some sanity to the project. It now runs as a single App Engine Standard service, using Google Cloud Storage for persistence and Google Sign-In and instance identities for authentication. In place of LucyBot, the UI is a simple index page that lists the available docs, plus a second page built with Swagger UI that renders each spec. Instead of waiting 10–15 minutes for docs that might or might not update, developers now see updates appear reliably and immediately.
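The new service’s code isn’t part of this post. As a rough illustration of the “simple index page” idea, here is a minimal Python sketch. It assumes the specs are stored as `.json` or `.yaml` objects whose names come from listing a Cloud Storage bucket, and that a hypothetical `/docs?spec=<name>` route serves the Swagger UI page for one spec. `render_index` and that route are illustrative names, not the actual implementation.

```python
from html import escape
from typing import Iterable
from urllib.parse import quote

# Assumption: specs are OpenAPI/Swagger files stored with these extensions.
SPEC_SUFFIXES = (".json", ".yaml", ".yml")


def render_index(object_names: Iterable[str], viewer_path: str = "/docs") -> str:
    """Build a minimal HTML index that links each spec to a Swagger UI page.

    `object_names` would come from a bucket listing; `viewer_path` is a
    hypothetical route that renders Swagger UI for `?spec=<name>`.
    """
    specs = sorted(name for name in object_names if name.endswith(SPEC_SUFFIXES))
    items = "\n".join(
        # quote() percent-encodes the name for the URL; escape() makes it safe as HTML text.
        f'  <li><a href="{viewer_path}?spec={quote(name)}">{escape(name)}</a></li>'
        for name in specs
    )
    return f"<!doctype html>\n<title>API docs</title>\n<ul>\n{items}\n</ul>\n"
```

With the `google-cloud-storage` client, the names could be gathered with something like `[blob.name for blob in storage.Client().list_blobs("example-bucket")]`, where the bucket name is a placeholder. Keeping the index a pure function of the bucket contents means there is no separate copy of the specs to fall out of sync.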

‘What If You Could Literally Push a Button?’

By Alice Liang and Ryan Lakritz

Outside of Maker Day, we work on the Data team, building machine learning models for different parts of The Times’s subscription business. One of these models has a parameter that our marketing partners can adjust. One day, we joked that it would be funny to have a physical knob for setting that value. We have been known to take jokes quite far, so we decided to build an Arduino device to do just that.

As part of Maker Day, some of our coworkers ran a “Making Things with Arduino” workshop, which we attended. The workshop was crucial in helping us set up the device, connect it to the code we wrote and power it.

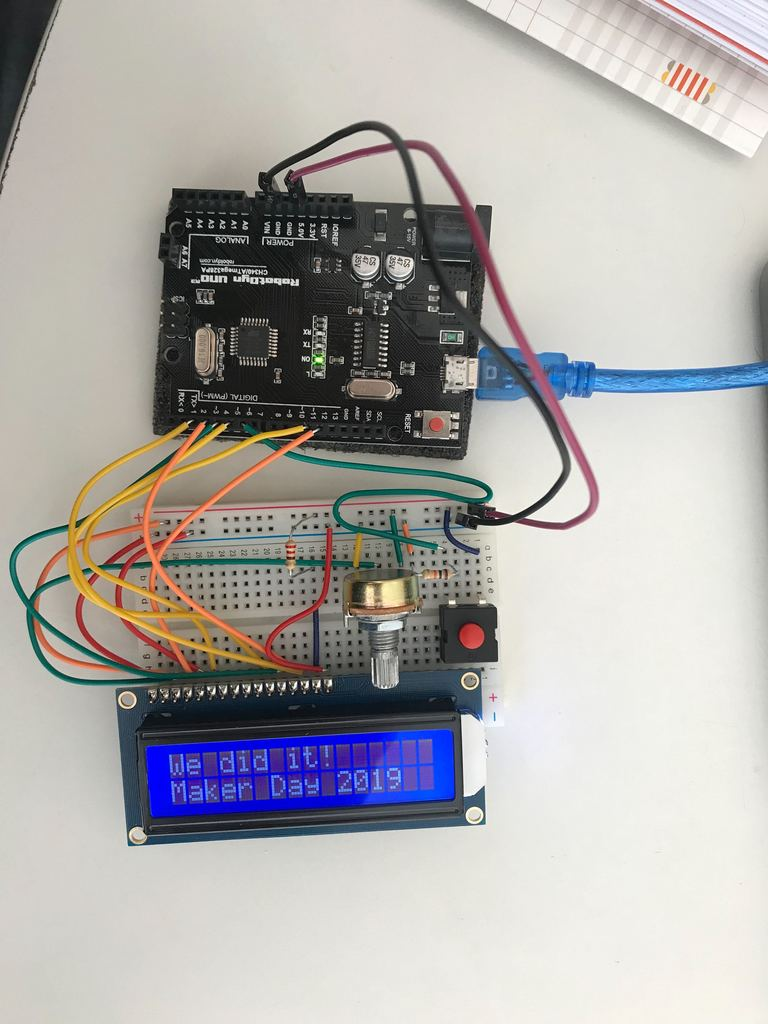

We spent most of the day wiring a panel-mount 10K potentiometer, a 16×2 LCD screen and a red on-off button to our breadboard and Arduino.

We wrote some Arduino code, in a C-like language whose basics we learned on the spot, to detect changes in the potentiometer’s position. The code displayed the new value on the LCD screen and prompted the user to send the selected value when ready. Finally, we wrote code to save that value when the red button was toggled on.
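The Arduino sketch itself isn’t included in the post. To keep this writeup’s examples in one language, here is the same control flow modeled in Python rather than Arduino’s C/C++: react to a potentiometer reading only when it has moved by more than a small noise threshold, show the value on the two 16-character rows of the LCD, and save it when the button is pressed. It assumes a 10-bit analog reading (0 to 1023, as `analogRead()` returns on boards such as the Uno); the noise threshold, the parameter range and the `Knob` class are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional

ADC_MAX = 1023        # 10-bit analogRead() range: 0..1023
NOISE_THRESHOLD = 4   # hypothetical: ignore jitter of a few counts
LCD_WIDTH = 16        # a 16x2 LCD shows two rows of 16 characters


def scale(raw: int, lo: float, hi: float) -> float:
    """Map a raw reading onto [lo, hi] (a floating-point analog of Arduino's map())."""
    return lo + (hi - lo) * raw / ADC_MAX


@dataclass
class Knob:
    lo: float = 0.0   # hypothetical parameter range
    hi: float = 1.0
    last_raw: Optional[int] = None
    saved: Optional[float] = None
    lcd: List[str] = field(default_factory=lambda: ["", ""])

    def on_reading(self, raw: int) -> None:
        """Called once per loop iteration with the latest potentiometer reading."""
        if self.last_raw is not None and abs(raw - self.last_raw) <= NOISE_THRESHOLD:
            return  # treat small fluctuations as noise, not a real turn of the knob
        self.last_raw = raw
        value = scale(raw, self.lo, self.hi)
        self.lcd = [f"Value: {value:.2f}"[:LCD_WIDTH], "Press to send"[:LCD_WIDTH]]

    def on_button(self) -> None:
        """Save the currently selected value when the red button is pressed."""
        if self.last_raw is not None:
            self.saved = scale(self.last_raw, self.lo, self.hi)
            self.lcd = ["Saved!", f"{self.saved:.2f}"[:LCD_WIDTH]]
```

The noise threshold matters because a potentiometer’s reading tends to wobble by a count or two even when nobody touches it; without it, the screen would keep redrawing.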

Then we turned to Python, which we code in more regularly, to read that value from the Arduino over its serial port connection. The Python code pushed the value to Google Cloud Storage, where in principle it could be incorporated into our model. That last step is all hypothetical, of course.
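A minimal sketch of that Python side, under a few assumptions: the Arduino prints one line per saved value in a hypothetical `VALUE:<number>` format; the port object is anything with a `readline()` method that returns bytes (as pyserial’s `serial.Serial` does); and the bucket object follows the `google-cloud-storage` API (`Bucket.blob()` and `Blob.upload_from_string()`). The line format, object name and function names are illustrative, not the team’s actual code.

```python
import json
from datetime import datetime, timezone
from typing import Optional

PREFIX = "VALUE:"  # hypothetical line format printed by the Arduino


def read_value(port) -> Optional[float]:
    """Read one line from the serial port and return the value, or None if it isn't one."""
    line = port.readline().decode("ascii", errors="ignore").strip()
    if not line.startswith(PREFIX):
        return None  # skip debug output, partial lines and empty reads after a timeout
    try:
        return float(line[len(PREFIX):])
    except ValueError:
        return None


def upload_value(bucket, value: float, object_name: str = "knob/latest.json") -> str:
    """Write the value as a small JSON object to Cloud Storage and return the payload."""
    payload = json.dumps({
        "value": value,
        "read_at": datetime.now(timezone.utc).isoformat(),
    })
    bucket.blob(object_name).upload_from_string(payload, content_type="application/json")
    return payload
```

On a real machine the two pieces might be wired together with `port = serial.Serial("/dev/ttyACM0", 9600, timeout=1)` and `bucket = storage.Client().bucket("example-bucket")`; the device path, baud rate and bucket name are placeholders. Passing the port and bucket in as arguments keeps the parsing logic testable without hardware or cloud credentials.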

The Arduino now sits on our desks, and while it isn’t in regular use, it has become a mascot for the model. It has generated a lot of excitement among our colleagues in the subscription growth business and has been a surprisingly helpful tool when discussing the model’s complex statistical components.

We enjoyed getting away from debugging our modeling code and into a hands-on hardware project for a day. Who knows what new jokes we’ll take on next for Maker Week.

Experiments with Text-to-Speech for Times Articles

By Bill French

At The New York Times, we’re constantly looking for novel ways to increase readership and engagement. For Maker Day this year, I started with a thought: what if readers could listen to audio versions of all Times stories?

This, of course, raises a few questions: how would the recordings be produced? Would we hire voice actors to do the reading? Would such a feature help persuade occasional readers to become subscribers?

Recent advances in speech synthesis offer a possible answer to the first two. Google Cloud Platform has unveiled a new set of WaveNet voices, accessible via its Text-to-Speech API, that produce more natural-sounding speech than the monotonous, tinny, robotic tones we so often endure. Using the API, I wrote a simple Node.js web service that converts article body text into an equivalent spoken-word audio file. The audio can then be embedded as an MP3 asset on a web page and played in a web browser.
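The Node.js service isn’t shown here, and this writeup’s examples stay in Python. As a sketch under stated assumptions: Cloud Text-to-Speech’s `text:synthesize` REST method takes a JSON body with `input`, `voice` and `audioConfig` fields and returns the audio base64-encoded in `audioContent`. Because the API caps how much input a single request may carry, a long article body has to be split first. The 5,000-byte cap and the `en-US-Wavenet-D` voice below are assumptions to check against current documentation, and the function names are hypothetical.

```python
import base64
from typing import Dict, List

MAX_BYTES = 5000  # assumed per-request input limit; check the current quota docs
VOICE = {"languageCode": "en-US", "name": "en-US-Wavenet-D"}  # illustrative voice choice


def chunk_paragraphs(paragraphs: List[str], max_bytes: int = MAX_BYTES) -> List[str]:
    """Greedily pack whole paragraphs into chunks of at most max_bytes (UTF-8)."""
    chunks: List[str] = []
    current = ""
    for para in paragraphs:
        if len(para.encode("utf-8")) > max_bytes:
            raise ValueError("paragraph exceeds max_bytes; split it by sentence first")
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate.encode("utf-8")) <= max_bytes:
            current = candidate  # paragraph still fits in the current chunk
        else:
            chunks.append(current)  # close the full chunk and start a new one
            current = para
    if current:
        chunks.append(current)
    return chunks


def synthesize_request(text: str) -> Dict:
    """JSON body for POST https://texttospeech.googleapis.com/v1/text:synthesize."""
    return {
        "input": {"text": text},
        "voice": dict(VOICE),
        "audioConfig": {"audioEncoding": "MP3"},
    }


def decode_audio(response: Dict) -> bytes:
    """The response carries the MP3 bytes base64-encoded in `audioContent`."""
    return base64.b64decode(response["audioContent"])
```

Splitting on paragraph boundaries, rather than at an arbitrary byte offset, avoids cutting a sentence in half, which would otherwise produce an audible break mid-phrase. Each chunk would then be sent as its own authenticated request and the decoded segments played in order.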

The function that synthesizes speech accepts either plain text or Speech Synthesis Markup Language (SSML) as input. SSML provides explicit instructions for things like how to pronounce certain words, where to insert pauses and what to ignore, so that the readout sounds natural.
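To make the SSML idea concrete, here is a small Python sketch that turns article paragraphs into an SSML document. Text is XML-escaped, each paragraph becomes a `<p>` element, a `<break>` adds a pause between paragraphs, and `<sub alias="...">` substitutes a spoken form for a written one. These are standard SSML elements that Cloud Text-to-Speech accepts; the alias table, the pause length and the `to_ssml` name are hypothetical editorial choices. The result would go in the request’s `input.ssml` field instead of `input.text`.

```python
import re
from typing import Dict, List
from xml.sax.saxutils import escape, quoteattr

# Hypothetical editorial table: written form -> how it should be spoken.
ALIASES: Dict[str, str] = {
    "N.Y.C.": "New York City",
    "Jr.": "Junior",
}


def to_ssml(paragraphs: List[str], aliases: Dict[str, str] = ALIASES,
            pause: str = "600ms") -> str:
    """Wrap paragraphs in SSML, applying <sub> aliases and pausing between paragraphs."""
    # Match against the escaped form of each written string, longest first, in one pass,
    # so a substitution is never re-matched. This is plain substring matching.
    escaped = {escape(w): spoken for w, spoken in aliases.items()}
    pattern = (re.compile("|".join(re.escape(w) for w in sorted(escaped, key=len, reverse=True)))
               if escaped else None)

    def substitute(match: "re.Match[str]") -> str:
        written = match.group(0)
        return f"<sub alias={quoteattr(escaped[written])}>{written}</sub>"

    parts = []
    for para in paragraphs:
        text = escape(para)  # &, < and > must be escaped inside SSML
        if pattern is not None:
            text = pattern.sub(substitute, text)
        parts.append(f"<p>{text}</p>")
    return "<speak>" + f'<break time="{pause}"/>'.join(parts) + "</speak>"
```

Even this small example hints at the editorial cost discussed next: someone has to decide which words need aliases and keep that table current.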

Writing SSML is labor-intensive and doesn’t fit neatly into existing workflows here, which raises more questions: who would be responsible for this markup? Would it become a new step in the editorial process? Will speech synthesis become good enough that we’d accept the occasional mispronunciation in exchange for audio? My hunch is that mispronunciations would be considered as undesirable as typos: not showstoppers, but best avoided.

The Text-to-Speech API has also revealed itself to be a quick study. In late March, before Mueller Report speculation had monopolized all media outlets, the synthesis engine incorrectly pronounced “Mueller” as MYOO-ler in its renderings of articles about the special counsel. By early April, the stress and vowel articulation had changed to the true pronunciation of Robert Mueller’s last name: MUH-ler.

It’s clear this technology will soon be ready for primetime, and that we should be thoroughly exploring it now. This summer, when Maker Week rolls around, I’ll continue developing the Story Player, building an interface that allows users to create daily, individualized article playlists.

If working in a mission-driven environment with an emphasis on work-life balance and comprehensive parental leave sounds good to you, come work with us. We’re hiring.

Innovation and Experimentation: 3 Projects from The New York Times’s Maker Day was originally published in Times Open on Medium, where people are continuing the conversation by highlighting and responding to this story.